Estimate HPC requirements

At the end of this class, you should be able to:

- Approximate the mesh size of a CFD simulation;

- Estimate the total number of time steps needed for a simulation;

- Estimate the memory and storage requirements of a simulation;

- Approximate the total computational cost of a simulation.

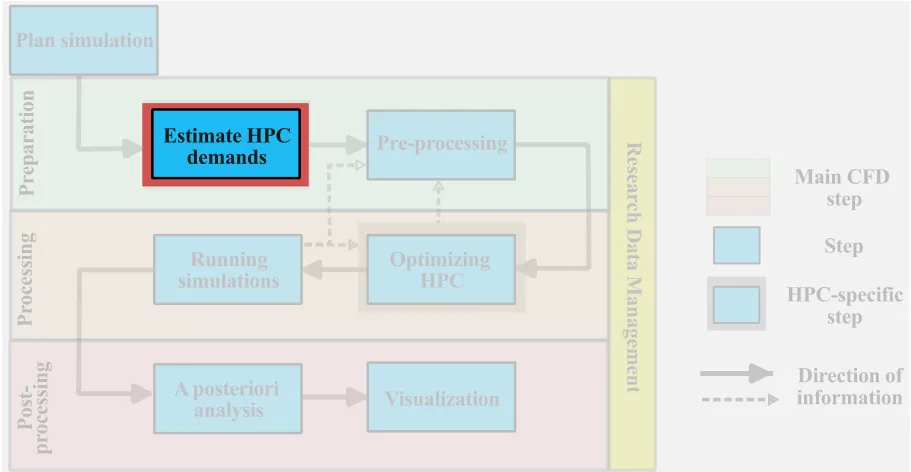

The objective of this section is to provide a systematic approach for an a priori estimation of the HPC cost of a large-scale CFD simulation. These estimates are meant to provide guidance prior to running simulations to better align the run(s) with the available computational resources. In this class, we will provide an approach to estimate:

- the computational mesh size;

- the time advancement needs;

- the storage and memory requirements.

Together, these estimates can provide an order of magnitude of the expected HPC costs. Each of these aspects will be discussed separately. Although these estimates are biased toward turbulent flows, the systematic approach can be used for other types of CFD simulations.

Mesh size estimation

The a priori estimation of the grid resolution requirement helps determine the anticipated computational expense of the simulation. These a priori estimates do not replace the grid sensitivity studies that are necessary to verify the CFD simulation results; instead, they are meant to provide an order-of-magnitude idea of the degrees of freedom of the problem to better plan the HPC resource allocation. These mesh estimates assume a fully structured mesh; a carefully designed unstructured mesh may help reduce the computational expense of a simulation. Thus, the current approach can be considered to give conservative grid estimates.

The costs for a CFD campaign are driven by either of the following:

- large parametric space, thus a large number of smaller simulations;

- large computational cost per simulation due to the multiscale nature of the problem.

For parametric studies, an accurate a priori estimate of the grid point requirement is often not necessary. An iterative approach can be done on the individual simulation that is likely to be modest in size, given that the computational cost stems from the extent of the parametric space that is being investigated. Furthermore, the iterative grid size estimation is likely more accurate and effective than an a priori estimation. Thus, we consider the more complex problem of estimating the approximate grid point requirements for a large multiscale CFD simulation. The resolution requirement of these multiscale CFD problems will be driven by:

- resolution of near wall-bounded flows;

- large gradients in the freestream;

- multiphase and multiphysics considerations;

- special flow features (transition, separation, shock waves);

- geometric complexity (or large scale separation) in the physical problem.

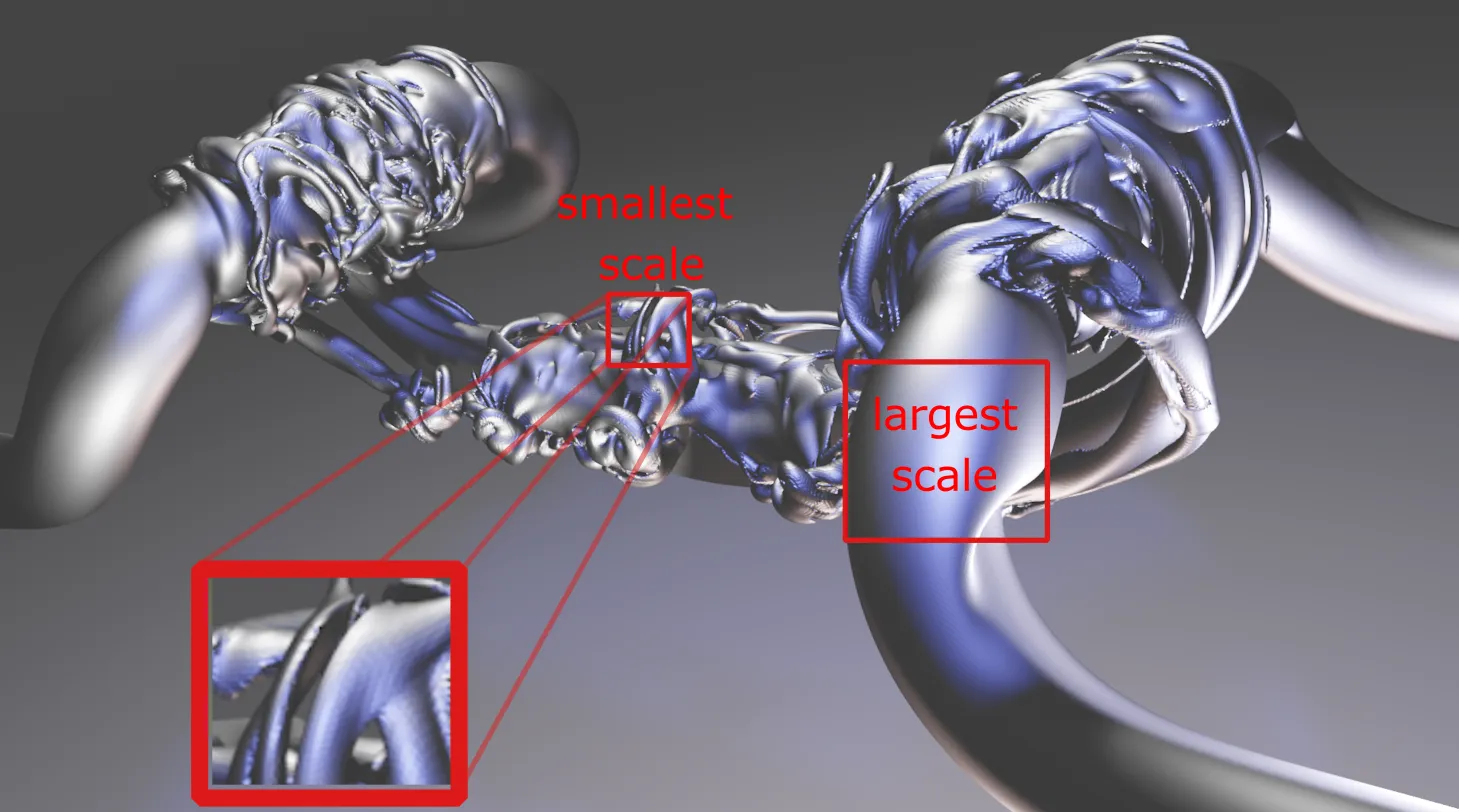

All these features driving the computational cost are tied to the multiscale nature (in space or time) of the fluid problem. To illustrate the multiscale nature of a typical CFD simulation, we show a pair of reconnecting, antiparallel vortices. This figure highlights the need to resolve both the sharp gradients at the smallest scale and the largest scale of the problem; the grid is therefore quickly constrained by the smallest and largest flow features. The largest scale must comfortably fit within the computational domain, as discussed in the last lesson, whereas the resolution at the smallest scale is the focus of the following subsections.

Estimating the total computational cost of a numerical simulation is both science and art. An exact a priori knowledge of the amount of CPU hours required is a very hard task to accomplish, but one can follow a consistent and reliable strategy, which we outline below.

Grid estimate for wall bounded flows

The no-slip condition arising from the viscous nature of the fluid results in large velocity gradients close to solid walls. Thus, the overall mesh size will be strongly influenced by the near-wall grid requirements. The state of the boundary layer will also influence the grid count; therefore, we need to consider separately the resolution of the:

- laminar boundary layer;

- turbulent boundary layer;

- transitional boundary layer (later in this class).

Numerically, we need to resolve the large gradients near the wall, which requires more grid points at that location. Naturally, the resolution is directly influenced by the numerical scheme and modelling considerations used in the CFD code; thus, these estimates are meant to provide an order of magnitude of the expected grid requirements for a second-order finite-volume solver.

Grid estimate for laminar boundary layers

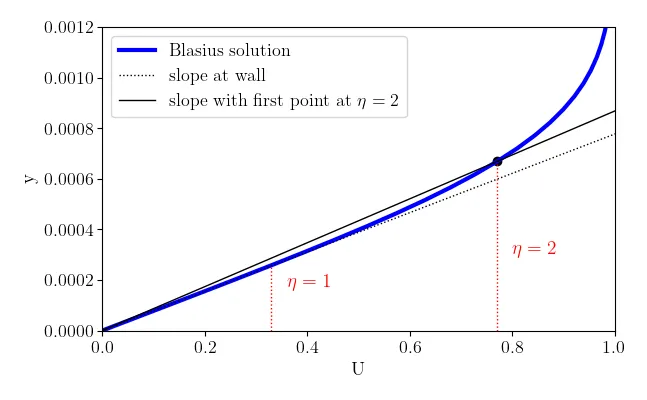

The zero-pressure gradient flat plate boundary layer has the distinct advantage of admitting an analytical solution of the velocity profile: the Blasius solution. The Blasius boundary layer is a self-similar solution, defined in terms of

A reasonable estimate would be to place the first grid point at

where

If we are interested in accurately capturing the thermal boundary layer (in a laminar flow), we know that:

Therefore, a laminar case with heat transfer would set the first grid point to meet both thermal and momentum conditions.

Let us work through an example.

Consider the very simple case of a zero-pressure gradient (

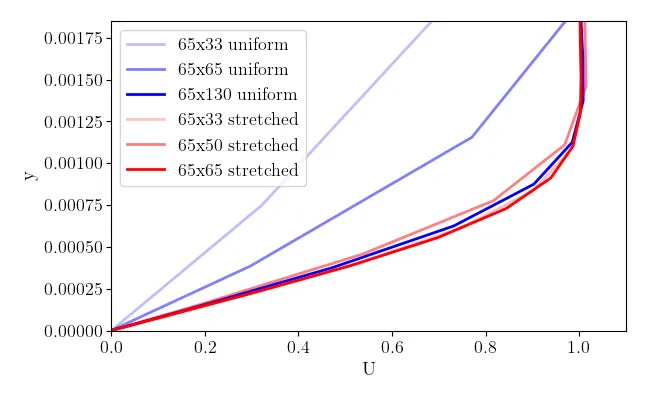

We note that stretched grids are essential to minimize the total required grid points (e.g. simulation at

Now that we have simulation results to work with, let us try to estimate the grid characteristics based on what we learned. Let us use the parameters of the simulation:

- density (

) - viscosity (

) - freestream velocity (

),

Here, we evaluated the boundary layer at

Now, let’s estimate the first grid point at the wall at

Based on this estimate, with a uniform mesh, this problem needs approximately 124 grid points in the wall normal direction (between

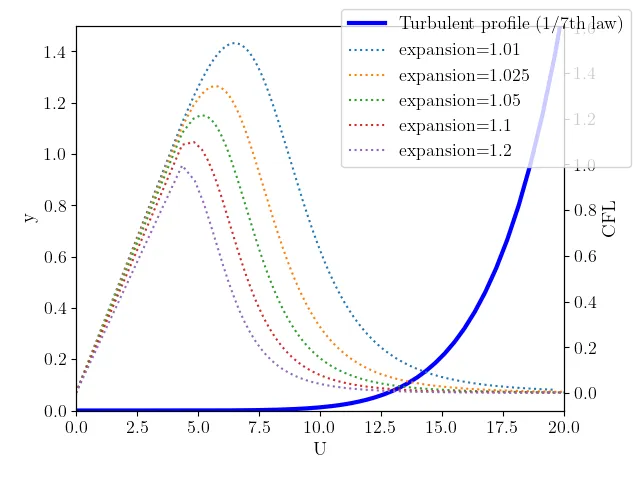

Now that we know the distance to the wall of the first grid point, we can estimate the total number of grid points in the wall-normal direction needed in the laminar boundary layer based on an acceptable expansion ratio of the mesh (typically below 1.1) to fill the domain in the

Thus, if the domain is

Based on these small calculations, we can estimate that we would probably need about

Assume the same physical setup defined above, except with a freestream velocity of only 35 m/s, and calculate:

- the position of the first grid point at

- an estimate of the total number of grid points (assuming a maximum aspect ratio of 20) for:

- a uniform mesh in the wall normal direction

- a stretched mesh with a stretching ratio of 1.05

Modify the input files of the simulations in the repository, re-run the simulation, and compare the grid resolution with the estimated grid points.
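The uniform and stretched wall-normal grid counts discussed in this subsection can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions: the function names are hypothetical, the boundary layer thickness uses the classical Blasius estimate (99% thickness of about 5 x / sqrt(Re_x)), and the first cell height is left as an input because it depends on the resolution criterion you choose. The numerical values in the usage section are placeholders, not the parameters of the repository case.

```python
import math


def blasius_delta99(x, u_inf, nu):
    """Classical Blasius estimate of the 99% boundary layer thickness."""
    re_x = u_inf * x / nu
    return 5.0 * x / math.sqrt(re_x)


def n_uniform(height, dy_first):
    """Number of equal cells of size dy_first needed to span height."""
    return math.ceil(height / dy_first)


def n_stretched(height, dy_first, ratio):
    """Number of cells needed to span height when each cell is `ratio`
    times larger than the previous one (geometric stretching)."""
    if ratio <= 1.0:
        return n_uniform(height, dy_first)
    # Sum of the geometric series: dy_first * (ratio**n - 1) / (ratio - 1) >= height
    n = math.log(1.0 + (ratio - 1.0) * height / dy_first) / math.log(ratio)
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical values, for illustration only
    u_inf, nu, x = 35.0, 1.5e-5, 0.5     # m/s, m^2/s, m
    domain_height = 0.1                  # m
    delta = blasius_delta99(x, u_inf, nu)
    dy_first = delta / 20.0              # assumed first cell height
    print("delta99 [m]:", delta)
    print("uniform cells:", n_uniform(domain_height, dy_first))
    print("stretched cells (r = 1.05):", n_stretched(domain_height, dy_first, 1.05))
```

Comparing the two counts for the same first cell height shows why stretching matters: the uniform count grows linearly with the domain height, whereas the stretched count grows only logarithmically.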

Grid estimate for turbulent boundary layers

As the estimation of grid requirements in a turbulent boundary layer builds on a fundamental understanding of turbulent flow, an optional summary of the main concepts in turbulence is provided below. These fundamental concepts of turbulence theory are the basis on which we can estimate the grid requirements in a turbulent boundary layer in the following.


Turbulence

Turbulence is a ubiquitous state of fluid motion that affects our daily lives in many ways. On a macro-scale, turbulent flows govern weather changes and the formation and evolution of tropical cyclones; on a smaller scale, turbulence affects pollutant transport in the atmosphere or fluid flow in our body. From an engineering standpoint, almost every fluid system of practical interest involves turbulent flows (e.g. flows over bluff/blunt bodies, flows through ducts and pipes, and turbomachines).

The purpose of this section is to give students a general overview of the current state of numerical simulation of turbulent flows, with the main goal of introducing a systematic approach to the solution of complex fluid problems. This is far from a complete description of the physics and mathematical modelling of turbulence, to which entire textbooks have been dedicated over the years (Pope, 2000; Durbin et al., 2010).

What is turbulence?

Turbulence is a chaotic, irregular state of fluid motion in which the instabilities present in the flow, caused by initial and boundary conditions, are amplified (LES: theory and applications, Piomelli). This results in a self-sustaining cycle of generation and destruction of turbulent eddies (regions of high vorticity in the flow). Although chaotic in nature, every turbulent flow displays universal characteristics:

- Unsteadiness: turbulent flows are inherently unsteady. The instantaneous velocity in a turbulent flow when plotted as a function of time might look random to any observer unfamiliar with the topic. This randomness is the reason why turbulence research is based on statistical methods.

- Three-dimensional: turbulent flows are highly 3D. Even though the flow might have one preferential direction and the resulting average velocity might be a function of only two coordinates, the instantaneous velocity fluctuates in all 3 spatial directions.

- Mixing: the presence of instantaneous fluctuations in all directions greatly amplifies the mixing of mass, momentum, and energy in the flow. Depending on the application of interest, enhanced mixing might be a positive outcome (e.g. internal combustion engines), or a negative one (e.g. increase in the skin-friction coefficient and increase in drag force).

- Vorticity: Vorticity is probably the most important and defining characteristic of turbulent flows. There are flows in nature that share some common characteristics of turbulence, but are characterized by negligible vorticity; these flows are not turbulent (e.g. random motion of waves on the ocean surface, potential flow over a boundary layer) (LES: theory and applications, Piomelli).

- Dissipative: Due to the enhanced mixing and vorticity, turbulence brings regions of different momentum (different velocities) into contact, resulting in the damping of velocity gradients through the effect of viscosity. As the velocity gradient is reduced, so is the energy content of the flow (or turbulent kinetic energy). Turbulence is a dissipative process: if energy is not supplied to the flow, turbulence will eventually die. Throughout this process, energy is irreversibly transformed to heat.

- Multiscale: As mentioned earlier, turbulent flows are characterized by the presence of coherent regions of high vorticity, eddies. In any turbulent flow, eddies span a wide range of length and time scales. This property of turbulence directly impacts the numerical simulation of turbulent flows and should be given a bit more attention.

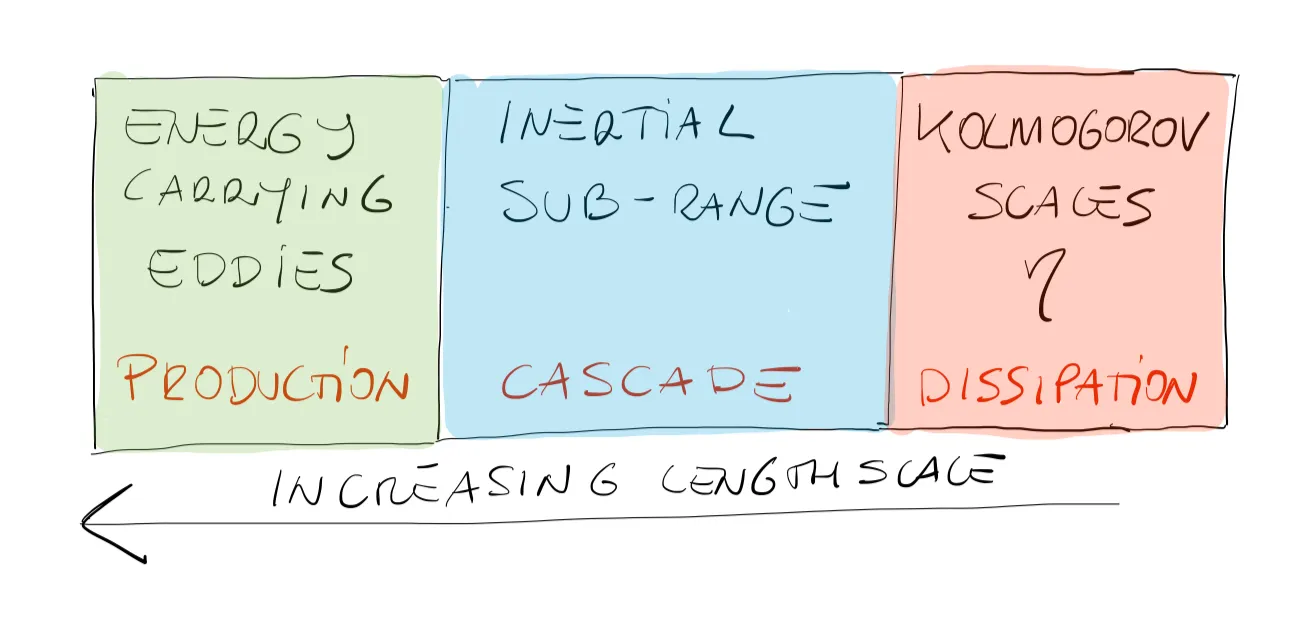

The scales of turbulence

Whether generated by perturbation in the initial condition or by rapid changes in geometry, turbulent flows are characterized by a wide distribution of eddies of various shapes and sizes. The behaviour of these eddies is strongly dependent on their length and velocity scales. Let’s consider, for instance, a high-Reynolds number flow with

where

Viscosity effects now become relevant, and energy is dissipated through viscous dissipation and irreversibly converted to heat. A visual sketch of this complicated process is shown in the figure below.

Important to keep in mind

- Energy is dissipated ONLY at the smallest scales.

- The rate of (how much) energy dissipation is set by the largest scales where production takes place.

- The intermediate scales only transfer energy from larger eddies to smaller eddies.

- As the Reynolds number increases, the separation between the large (integral) and small (dissipative) scales increases.
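To make the last point concrete, the classical Kolmogorov scaling can be evaluated numerically. The sketch below is illustrative only and rests on stated assumptions: the dissipation rate is approximated by the standard large-scale estimate (velocity cubed over length), and the function and variable names are hypothetical. It returns the dissipative length and time scales, whose ratios to the large scales behave as Re^(3/4) and Re^(1/2).

```python
def kolmogorov_scales(u_large, l_large, nu):
    """Order-of-magnitude Kolmogorov scales from large-scale quantities.

    Assumes the dissipation rate is set by the large scales,
    eps ~ u_large**3 / l_large (a scaling estimate, not an exact value).
    """
    eps = u_large**3 / l_large          # dissipation rate [m^2/s^3]
    eta = (nu**3 / eps) ** 0.25         # Kolmogorov length scale [m]
    tau_eta = (nu / eps) ** 0.5         # Kolmogorov time scale [s]
    return eps, eta, tau_eta


if __name__ == "__main__":
    # Hypothetical large-scale velocity [m/s], length [m], and viscosity [m^2/s]
    for u_large in (1.0, 10.0, 100.0):
        l_large, nu = 1.0, 1.5e-5
        reynolds = u_large * l_large / nu
        _, eta, tau_eta = kolmogorov_scales(u_large, l_large, nu)
        print(f"Re = {reynolds:.2e}  L/eta = {l_large / eta:.2e}  "
              f"T/tau_eta = {(l_large / u_large) / tau_eta:.2e}")
```

Since a DNS must resolve the Kolmogorov length scale in all three directions, the scale separation above implies a grid count that grows roughly as Re^(9/4) for this kind of estimate.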

Numerical simulation of turbulent flows

Computational Fluid Dynamics (CFD) for the simulation of turbulent flows is becoming more and more popular as the available computational power of modern computers increases. In the following, we will overview the most common approaches followed in CFD, with the idea in mind that the numerical methods requirements greatly change based on what one wants to analyze in the flow.

- The first and most straightforward approach is to directly discretize the equations of motion, and solve them numerically as done in the Poisson equation example in the previous section. This method is commonly referred to as Direct Numerical Simulation (DNS). This method aims at resolving EVERY scale of turbulent motion (integral to dissipative). Assuming that the mesh is fine enough to capture the smallest eddy (Kolmogorov scale), one will obtain a three-dimensional, time-dependent solution of the governing equations in which the only source of error is the one introduced by the numerical methods (Pope, 2000).

- The second very common approach to finding a numerical solution to turbulent flows is to decompose the equations of motion into mean and fluctuating components. This process is known as Reynolds' averaging procedure, where the long-time average of a general quantity $f$ is defined as $\overline{f} = \frac{1}{T}\int_0^T f \, dt$, where $T$ is a time interval much larger than any time scale in the turbulent flow. Any instantaneous quantity in the flow can therefore be taken as the sum of a mean and a fluctuating part, $f = \overline{f} + f'$. If one applies the Reynolds decomposition to the equations of motion, one obtains the well-known Reynolds-Averaged Navier-Stokes (RANS) equations, which describe the evolution of the mean (large-scale) quantities. Unfortunately, the resulting system of equations is not closed, as the effect of the fluctuating component appears in the Reynolds stress term and requires the introduction of approximations (turbulence models). A very wide range of models for the Reynolds stresses exists, ranging from simple algebraic models, to more complex two-equation models, to full Reynolds stress closure models (LES: theory and applications, Piomelli).

- Direct Numerical Simulation (DNS). All scales of turbulence must be solved.

- Large-Eddy Simulation (LES). Only the large, energy-carrying eddies are resolved while smaller ones (smaller than a cutoff filter) are modelled using a full closure model. LESs can be Wall-Resolved (WRLES) or Wall-Modelled (WMLES) depending on how the near-wall region is treated.

- Reynolds-Averaged Navier-Stokes (RANS). The averaged equations for mean quantities are solved, while the Reynolds stress term is modelled via algebraic, one-, or two-equation models.

Due to the combined effect of turbulent mixing near the wall and the no-slip condition at the wall, a larger velocity gradient (and thus a smaller grid spacing) will arise in the turbulent boundary layer compared to a laminar boundary layer of the same height. To assess the required grid resolution, we need to understand the self-similarity of the average turbulent velocity profile, that is, the Law of the Wall. To this end, we can non-dimensionalize the velocity and distance with the characteristic quantities at the wall. The characteristic velocity at the wall is the friction velocity, defined as:

$$u_\tau = \sqrt{\frac{\tau_w}{\rho}}$$

which is the square root of the ratio of the wall shear stress $\tau_w$ and the fluid density $\rho$. The inner-scaled wall distance and velocity are then $y^+ = y u_\tau / \nu$ and $u^+ = u / u_\tau$.

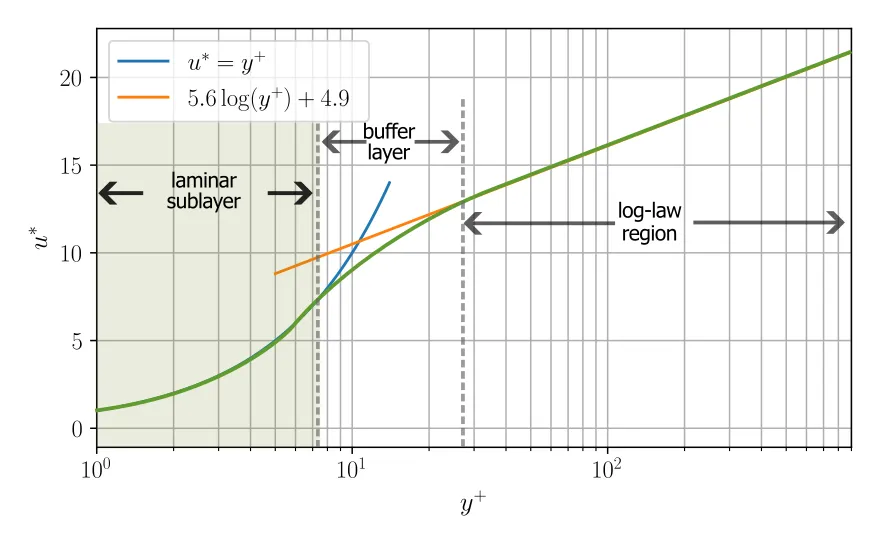

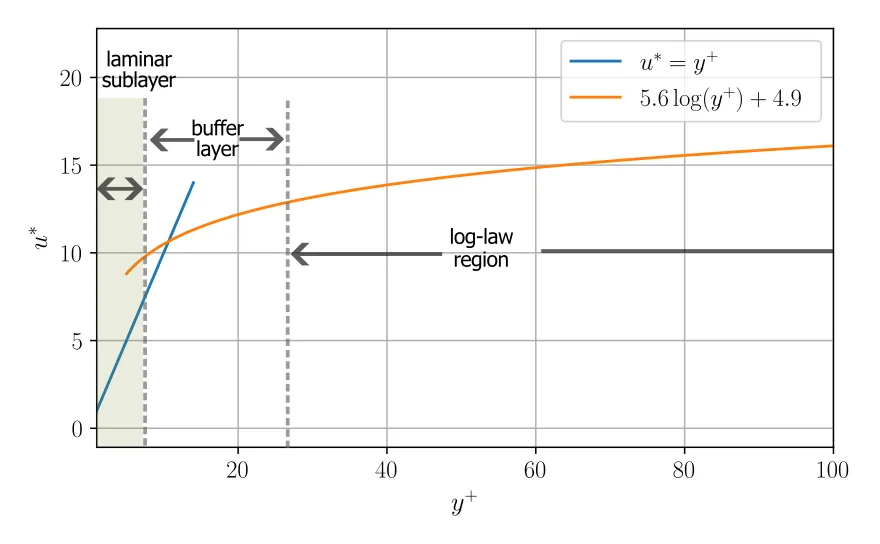

With these definitions, we can plot the typical velocity profile of a zero-pressure-gradient turbulent (incompressible) boundary layer, often referred to as the law of the wall (which formally refers to the logarithmic velocity profile, but is often used to refer to the universality of the inner-scaled mean velocity profile). The near universality of the mean velocity profile (we note there are slightly different scalings for high-speed and non-adiabatic walls, for example) results in three distinct regions in the inner layer of the boundary layer:

- Viscous sublayer: primarily laminar region with linear relationship between

- Buffer layer: overlap region between the two regions

- Log-law region: showing a logarithmic relation between distance from the wall and velocity

The universal velocity profile is often shown in a semilog plot (below), but a linear plot is more insightful for determining the required first grid point.

We observe that any mesh point placed in the laminar sublayer should give us the correct velocity gradient at the wall (as we know the no-slip condition at the wall, thus

To correctly resolve the near-wall velocity profile, the first grid point needs to comfortably fall within the linear sublayer (

Given the linear profile in the viscous sublayer, some researchers propose to slightly loosen the

Wall-resolved simulations

Wall-resolved simulations seek to: (a) satisfy the near-wall first grid point requirement, and (b) provide sufficient resolution in the boundary layer to resolve all the gradients. For wall-resolved RANS modelling, we can generally expand the grid with a ratio of up to 1.1, with a minimum of 10 grid points within the boundary layer thickness if the accuracy requirement is not too high; otherwise, 30-40 cells in the wall-normal direction are advised. As the turbulence is completely modelled in RANS, the grid requirement in the streamwise and spanwise directions is not as severe and the grid can have a significant aspect ratio:

In LES (more specifically, we are discussing wall-resolved LES or WRLES), only the large eddies must be resolved under the assumption that the dissipative scales (

As this is close to DNS cost, we often accept higher aspect ratio meshes for wall-resolved LES:

The estimation of grid resolution requirement in the outer boundary layer is characterized by a length scale proportional to the thickness of the boundary layer

We can use these results to provide a scaling for the required number of grid points for a WRLES. Under these conditions, Choi and Moin (2012) estimated that the number of grid points required to resolve the inner layer in a WRLES is:

In DNS, all scales of motion, including the smallest dissipative scales (

Given this constraint, we typically have a much lower stretching ratio in the wall normal direction, which is taken below 1.025 but often around 1.01. The number of grid points required is proportional to the ratio

Wall functions or wall modelled simulations

The universality of the turbulent mean flow profile has motivated the development of wall functions and wall models to mitigate strict resolution requirements near the walls. These models help reduce the total grid points near the wall, which also greatly benefits the computational time advancement (discussed in the next section).

For RANS wall functions, the first grid point should be set within the log-law region, thus between

Wall-modelled LES (WMLES) can similarly reduce the near-wall requirement by modelling the characteristics immediately adjacent to the wall. We recall that wall-resolved LES (WRLES) has a resolution requirement that is nearly as strict as that of a DNS! To estimate the grid point requirements in WMLES, we resort to the analysis by Choi and Moin (2012) that showed:

where

A summary of the scaling results is shown in the table below:

| Turbulence approach | | | |
|---|---|---|---|
| RANS (wall-resolved) | 10-100 | N/A | |
| RANS (wall function) | 10-100 | N/A | |
| WMLES | 10-100 | | |
| WRLES | 4-20 | | |
| DNS | 1-6 | | |

These are meant to be orders of magnitude approximations of the grid count and will also depend on other parameters of the simulation.

Grid resolution in the freestream

Although the near wall mesh resolution greatly impacts the total number of grid points needed in a simulation, the resolution of other gradients in the freestream can greatly impact the total simulation costs.

For RANS simulations where we only need to resolve the mean flow gradients, mixing layers, jets, or wakes can be resolved with at least 10 grid points across the layer. For scale resolving simulations, such as LES, it is suggested that we should aim to have at least 20 points across the characteristic diameter of a jet/wake or in a shear layer. Unlike near the wall where there is a strong anisotropy which can justify a large aspect ratio, in the freestream, we should aim to greatly reduce the aspect ratio in LES such that

We can estimate the rate of energy dissipation

where

Multiphase, multiphysics and special flow features (transition, shock waves)

The generalization of the grid estimates to multiphase or multiphysics simulations is outside the scope of this course. In these cases, we need to resolve both the hydrodynamics of the problem in addition to the added grid requirements stemming from the multiphase or multiphysics aspect of the simulation. Therefore, a good starting point is to estimate the hydrodynamic grid requirement which can be corrected with a stricter resolution requirement arising from these phenomena.

Here, we explore the mesh considerations for three commonly encountered problems:

- Combustion

- Acoustics

- Shock waves

Each of these specific features is briefly discussed below.

Combustion

An increased grid requirement is needed near the reactive zone, as this region will be characterized by strong thermal and compositional gradients that should be captured. The actual number of grid points needed across the flame strongly depends on the modelling paradigm used. To obtain an estimate on the required number of grid points to fully resolve a laminar flame, one could run simplified one-dimensional test cases (using, for example, Cantera), for either a diffusion or premixed flame. By analyzing the sensitivity of the 1D simulation, we can estimate the grid point requirement across a flame.

In most engineering applications, combustion will rely on some modelling assumptions, especially when combustion arises in a turbulent regime. Premixed combustion is typically more computationally demanding than non-premixed combustion, as premixed flames are thin and greatly influenced by the surrounding turbulence. A key feature of these flames is their laminar flame speed, which requires the flame structure to be resolved. Typical laminar premixed flames are thinner than 1 mm, which imposes a strict resolution requirement. Numerical techniques, such as the thickened flame model, can be used to loosen the strict resolution requirement.

Acoustics

If we are interested in modelling the propagation of an acoustic wave in a fluid, grid resolution and numerics play an important role. In addition to using low-dispersion and low-dissipation numerics, we need a minimum number of grid points per wavelength to propagate an acoustic wave on a discrete mesh. The number of grid points needed per wavelength is highly dependent on the numerical scheme used in the simulation. To estimate the number of grid points per wavelength, a modified wavenumber analysis can be conducted. Conversely, we can also use the modified wavenumber analysis to estimate the maximum frequency that can be resolved on a given grid. On the basis of these values, we can correct the overall grid estimate accordingly.
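Once a points-per-wavelength requirement is known for the scheme, the link between grid spacing and resolved frequency is a one-line calculation. The sketch below assumes a uniform grid spacing and a user-supplied points-per-wavelength value; the value of 20 used in the example is a placeholder, not a recommendation, and the function names are hypothetical.

```python
def max_resolved_frequency(sound_speed, dx, points_per_wavelength):
    """Highest frequency whose wavelength still spans the required
    number of grid points on a grid of spacing dx."""
    min_wavelength = points_per_wavelength * dx
    return sound_speed / min_wavelength


def required_spacing(sound_speed, frequency, points_per_wavelength):
    """Grid spacing needed to resolve a target frequency."""
    wavelength = sound_speed / frequency
    return wavelength / points_per_wavelength


if __name__ == "__main__":
    c = 340.0    # speed of sound in air [m/s], approximate
    ppw = 20     # assumed points per wavelength (scheme dependent)
    print("f_max on a 1 mm grid [Hz]:", max_resolved_frequency(c, 1.0e-3, ppw))
    print("dx needed for 5 kHz [m]:", required_spacing(c, 5.0e3, ppw))
```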

Shock waves

Shock waves are an important feature of high-speed flows that are characterized by a nearly discontinuous jump in the hydro- and thermodynamic properties of the flow. Due to the viscous and thermal diffusion in the fluid, the shock wave does have a very small but finite thickness. In a laminar shock, the thickness is approximately 10 mean free paths of the molecules. In a turbulent regime, the averaged thickness will be much larger than the purely laminar case, albeit often much smaller than the grid resolution in typical engineering simulations.

Given that most CFD simulations will never resolve the shock wave, shock-fitting techniques are often used for applied simulations. If one is interested in resolving the average shock thickness in a turbulent flow, a simple estimate of the characteristic shock thickness has been proposed by Lacombe et al. This estimate can guide the resolution requirement across the shock.

Estimating time advancement

The time advancement of the CFD simulation has a significant impact on the total HPC costs. Starting from the initial condition (for an unsteady problem), the governing equations need to be advanced by a time step,

- Stability of the temporal scheme (numerics);

- Characteristic time of the smallest resolved timescale (physics).

The stricter of the two time constraints will impose a limit on

Numerical timestep limitation

The stability of the time advancement scheme depends, first and foremost, on the numerics. Two general classes of numerical time advancement schemes can be defined:

- Implicit time advancement: unconditionally (most of the time) stable

- Explicit time advancement: conditionally stable

Although implicit methods have clear advantages in terms of numerical stability, which allows the user to take very large time steps, they typically require preconditioners, and good parallel efficiency is often difficult to achieve on large clusters. The use of implicit methods can therefore degrade the code scaling despite the larger time step. Explicit time advancement schemes, on the other hand, are very well suited for parallel computing, but these methods face numerical stability constraints based on the Courant–Friedrichs–Lewy (CFL) condition. The CFL number is defined as:

$$\mathrm{CFL} = \frac{u \, \Delta t}{\Delta x}$$

where $u$ is the local characteristic velocity, $\Delta t$ is the time step, and $\Delta x$ is the local grid spacing. Two limits are commonly distinguished:

- convective CFL limit: the most general condition tied to the local velocity of the fluid

- acoustic CFL limit: typically arises in compressible solver with wave propagation

The maximum CFL condition will arise at locations in the flow that have the largest local characteristic velocity and the smallest local mesh. Thus, the timestep limit often arises in the boundary layer or in the shear layers. If the mesh is well-constructed, the finest mesh arises in regions of large gradients; therefore, estimations of the allowable timestep will typically focus on identifying the locations in these regions with the highest CFL number. For incompressible simulations, the time advancement is bound by the convective limit, in other words

A similar analysis can be conducted in the laminar boundary layer velocity profile. If the code does not have the ability to use local timestepping (which allows for different timestep size at different spatial locations), the time step can be estimated a priori by:

- assuming (or computing) the stability limit of the numerical scheme (typically

- based on the resolution requirement estimates, identifying the location of maximum CFL, and estimating

- computing the total number of time steps,

Although the above approach is generalizable, some researchers have proposed scaling analyses to estimate the number of timesteps in typical scale-resolving simulations (Yang and Griffin, 2021).

| Turbulence approach | Scaling |
|---|---|
| WMLES | |
| WRLES | |
| DNS | |

In compressible simulations, the time advancement limit is more strongly constrained by the acoustic wave propagation speed (

Keep in mind that some flows may also be constrained by a viscous stability limit (not covered here).
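The a priori time step estimate described in this subsection can be sketched as follows. This is a minimal illustration under stated assumptions: the CFL number is taken as (characteristic speed) x (time step) / (grid spacing), the acoustic limit simply adds the speed of sound to the flow speed, and the stability limit of the scheme is supplied by the user. All names and numerical values are hypothetical.

```python
import math


def stable_dt(cfl_max, dx_min, u_max, sound_speed=0.0):
    """Largest time step allowed by the CFL limit.

    With sound_speed = 0 this is the convective limit; a non-zero
    sound_speed gives the acoustic limit of a compressible solver.
    """
    return cfl_max * dx_min / (abs(u_max) + sound_speed)


def n_time_steps(t_total, dt):
    """Number of time steps needed to cover the simulated time t_total."""
    return math.ceil(t_total / dt)


if __name__ == "__main__":
    # Hypothetical values at the location of maximum CFL
    cfl_max = 0.5        # assumed stability limit of the scheme
    dx_min = 1.0e-4      # smallest grid spacing [m]
    u_max = 60.0         # largest local velocity [m/s]
    t_total = 0.5        # physical time to simulate [s]

    dt_conv = stable_dt(cfl_max, dx_min, u_max)
    dt_acou = stable_dt(cfl_max, dx_min, u_max, sound_speed=340.0)
    print("convective dt [s]:", dt_conv, "steps:", n_time_steps(t_total, dt_conv))
    print("acoustic dt [s]:  ", dt_acou, "steps:", n_time_steps(t_total, dt_acou))
```

Running the two cases side by side shows how much more restrictive the acoustic limit becomes at low Mach number, where the speed of sound dominates the characteristic velocity.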

Physical timestep limitation

Although the stability limit usually constrains the timestep, it is important to recognize that governing equations are integrated in time. We have no knowledge of the information contained within the time step (unless we have substeps), thus we need to make sure that the time advancement

In steady RANS, there is no physical timestep limitation due to the steady-state nature of the solution. In unsteady RANS, the physical timescale would be tied to the well-defined large-scale structures in the flow (shedding frequency, oscillations, etc.). For DNS, we need to rely on the smallest resolved timescale, which is the Kolmogorov timescale:

$$\tau_\eta = \sqrt{\frac{\nu}{\varepsilon}}$$

where $\nu$ is the kinematic viscosity and $\varepsilon$ is the rate of energy dissipation.

In LES, the smallest resolved timescale can vary greatly based on a number of considerations. As a first-order estimate, we can use the characteristic Taylor timescale, which can be defined as:

Although these physical timestep limitations may be relevant to compute, most of the time the numerical stability will be the limiting factor in determining

Estimating memory and storage requirements

The memory used during a CFD computation and the storage (disk space) requirements are related concepts. Although the total memory requirements are code and numerics specific, the memory usage and storage will scale with the total number of grid points of the problem. Both of these points are discussed below.

Memory requirements

An order-of-magnitude estimate of the required memory will help determine the total amount of memory needed for a simulation (and thus the number of cores). As mentioned, the total memory depends greatly on many factors (numerics, codes, meshes, etc.); therefore, a precise memory estimate is not feasible. Instead, we provide two ways to estimate the memory needs of a simulation: (a) the minimal memory requirement, (b) a scaled memory approach.

Minimal memory requirement

The minimal memory requirement seeks to determine the minimal amount of memory required for the mesh and the variables necessary for the simulation. This value will certainly underestimate the true memory required but can provide a starting point for memory considerations.

Let us assume that all the integers and real values are stored on 64 bits (we are likely overestimating the integers but this simplification provides a simpler estimation). Therefore, we need to compute the total memory required for both the mesh and the data. Let’s consider that the mesh contains

Then we can estimate the data needed to store the transported variables in the CFD. If we have incompressible, single-phase RANS equations, we typically carry 4 hydrodynamic quantities (

The total memory is then:

This provides a lower-bound estimate of the total memory requirement.
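A lower-bound estimate of this kind can be sketched in a few lines. The sketch assumes a structured mesh (three coordinates per grid point, no connectivity) with every value stored on 64 bits, as stated above; the function name, the variable count, and the grid size are hypothetical and should be adapted to the solver at hand.

```python
def minimal_memory_bytes(n_points, n_variables, n_coordinates=3, bytes_per_value=8):
    """Lower bound on memory: mesh coordinates plus transported variables,
    each stored once per grid point on bytes_per_value bytes."""
    return n_points * (n_coordinates + n_variables) * bytes_per_value


if __name__ == "__main__":
    n_points = 50_000_000   # hypothetical grid size
    n_variables = 4         # e.g. three velocity components and pressure
    mem = minimal_memory_bytes(n_points, n_variables)
    print(f"minimal memory: {mem / 1e9:.2f} GB")
```

Real solvers also hold gradients, fluxes, previous time levels, and communication buffers, so the actual footprint is typically several times larger than this bound.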

Scaled memory approach

The minimal memory requirement approach is only a rough estimate of true memory usage. This is because different codes and different numerical methods will have different memory footprints. As an alternative approach, we can roughly assume that memory usage will scale with the number of grid points. This is a coarse, but reasonable estimate that can provide a more accurate memory estimation for very large computations.

To investigate the memory usage during runtime, we can use the command seff:

    [user@gra-login1] seff 12361444
    Job ID: 12361444
    Cluster: niagara
    User/Group: jphickey/jphickey
    State: FAILED
    Nodes: 1
    Cores per node: 80
    CPU Utilized: 02:58:01
    CPU Efficiency: 32.09% of 09:14:40 core-walltime
    Job Wall-clock time: 00:06:56
    Memory Utilized: 1.81 GB
    Memory Efficiency: 1.06% of 170.90 GB

This command can be run after the end of a simulation to reveal the memory characteristics. Therefore, if you hope to run a refined simulation with

This gives a somewhat better estimate of the memory requirement of a very large simulation.
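The scaled memory approach amounts to a linear extrapolation from a measured run. The sketch below assumes memory grows in direct proportion to the number of grid points, which is a coarse assumption; the reference memory is the "Memory Utilized" value from the seff report above, while the grid sizes and the per-node memory used in the example are hypothetical placeholders.

```python
import math


def scaled_memory_gb(mem_ref_gb, n_ref, n_target):
    """Extrapolate a measured memory footprint linearly with grid size."""
    return mem_ref_gb * n_target / n_ref


def nodes_needed(mem_gb, mem_per_node_gb):
    """Minimum number of nodes whose combined memory holds the job."""
    return math.ceil(mem_gb / mem_per_node_gb)


if __name__ == "__main__":
    mem_ref_gb = 1.81       # GB, from the seff report of the reference run
    n_ref = 2.0e5           # hypothetical grid size of the reference run
    n_target = 5.0e7        # hypothetical grid size of the refined run
    mem_per_node_gb = 170.9 # assumed usable memory per node

    mem = scaled_memory_gb(mem_ref_gb, n_ref, n_target)
    print(f"estimated memory: {mem:.1f} GB")
    print("nodes needed:", nodes_needed(mem, mem_per_node_gb))
```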

Storage requirements

The total storage estimate can be assessed a priori based on the expected number of ‘snapshots’ (or three-dimensional output or restart files), the number of parameters stored, and the grid size. Saving simulations to disk can be done to: (1) restart the simulation, (2) archive the data, or (3) postprocess the simulation. We can estimate the total size of the snapshot in binary format. To do so, we need to consider the following:

- precision (single- or double-precision)

- mesh type (structured or unstructured)

- output format (e.g. vtk, silo, etc.)

- number of variables output.

Most of the real variables in modern CFD are double-precision, thus taking up 64 bits. Some software allows output to be written in 32-bit precision, which can reduce the overall storage need without much loss in precision. Integers are also output, especially for unstructured mesh formats, to relate faces and grid points. These are usually stored as 32-bit integers, although for very large meshes, 64 bits may be needed, as a signed 32-bit integer can only represent values up to 2,147,483,647.

For practical reasons, we propose a simple approach to estimate the minimum size of a single snapshot of a structured mesh. Structured meshes have implicit connectivity between the mesh points, whereas unstructured meshes must also store the connectivity among each grid point. The present estimate represents the minimum size of the data file. First, we list all the variables that are to be output:

- coordinates (

- velocity (

- thermodynamics (

- multiphase or multiphysics

- any additional outputs (derivatives etc.)

Let us consider an incompressible code for which we need a restart file. To restart the simulation we need at least the coordinates (3), velocity (3), and pressure (1), or 7 variables. These need to be written for

where

    # [user@gra-login1] diskusage_report
    Description                 Space             # of files
    Home (username)             280 kB/47 GB      25/500k
    Scratch (username)          4096 B/18 TB      1/1000k
    Project (def-username-ab)   4096 B/9536 GB    2/500k
    Project (def-username)      4096 B/9536 GB    2/500k

Making sure that sufficient disk space is available is critical to avoid errors at runtime.
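The minimum snapshot size for a structured mesh, and the resulting storage for a whole run, can be estimated with a short script. This is a sketch under stated assumptions: every variable is written once per grid point in raw binary with no file-format overhead, and the grid size and snapshot count in the example are hypothetical.

```python
def snapshot_bytes(n_points, n_variables, bytes_per_value=8):
    """Minimum size of one snapshot on a structured mesh (no connectivity)."""
    return n_points * n_variables * bytes_per_value


def campaign_bytes(n_points, n_variables, n_snapshots, bytes_per_value=8):
    """Minimum storage for all snapshots written during a run."""
    return n_snapshots * snapshot_bytes(n_points, n_variables, bytes_per_value)


if __name__ == "__main__":
    n_points = 50_000_000   # hypothetical grid size
    n_variables = 7         # coordinates (3), velocity (3), pressure (1)
    n_snapshots = 200       # hypothetical number of output files

    single = snapshot_bytes(n_points, n_variables)
    total_double = campaign_bytes(n_points, n_variables, n_snapshots)
    total_single = campaign_bytes(n_points, n_variables, n_snapshots, bytes_per_value=4)
    print(f"one snapshot:            {single / 1e9:.2f} GB")
    print(f"all snapshots (64-bit):  {total_double / 1e12:.2f} TB")
    print(f"all snapshots (32-bit):  {total_single / 1e12:.2f} TB")
```

Comparing the total against the quota reported by diskusage_report before submitting the job helps avoid a run that fails when the disk fills up.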

Computational cost of the simulation

On the basis of the above discussions, we found ways to estimate:

To estimate the total HPC cost, we need to know the approximate wall clock time per-time-step,

Let’s say, we plan to run a simulation with

Assuming a scaling efficiency of 80% when going up to 24 cores (discussed next class), we get:

The storage requirements are easy to assess based on the discussion in the previous subsection.
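The pieces above can be combined into a rough core-hour estimate. The sketch below is one possible way to do so, under stated assumptions: a measured wall-clock time per time step on a reference core count is extrapolated to the target core count with a single parallel efficiency factor, and the total cost is the wall-clock time multiplied by the number of cores. The function names and all numerical values are hypothetical.

```python
def wall_time_per_step(t_step_ref, cores_ref, cores_target, efficiency):
    """Time per step on cores_target, extrapolated from a reference timing.

    efficiency is the parallel (scaling) efficiency, e.g. 0.8 for 80%.
    """
    speedup = efficiency * cores_target / cores_ref
    return t_step_ref / speedup


def core_hours(n_steps, t_step, n_cores):
    """Total cost in core-hours for n_steps of t_step seconds on n_cores."""
    return n_steps * t_step * n_cores / 3600.0


if __name__ == "__main__":
    t_step_ref = 24.0    # measured seconds per step on one core (hypothetical)
    n_steps = 100_000    # from the time advancement estimate (hypothetical)
    n_cores = 24

    t_step = wall_time_per_step(t_step_ref, 1, n_cores, efficiency=0.8)
    print(f"time per step on {n_cores} cores: {t_step:.2f} s")
    print(f"wall-clock time: {n_steps * t_step / 3600.0:.1f} h")
    print(f"cost: {core_hours(n_steps, t_step, n_cores):.0f} core-hours")
```

Note that a lower efficiency shortens the wall-clock time less than ideal scaling would, so the core-hour cost rises even though the same work is done.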

An a priori estimation of the HPC costs of the backward-facing step is undertaken. Let us first list out the characteristics of the simulation (from Jovic and Driver (1994)):

From these data, we can compute the viscosity of the fluid:

First, let us estimate the first grid point in this turbulent flow. We use the Schlichting skin-friction correlation:

From the friction coefficient, we can compute the wall shear stress as:

The friction velocity can now be estimated:

If we want a

Assuming a grid stretching ratio of 1.025, from the bottom wall to the top wall of the domain (

Now, let us consider the number of grid points required in the streamwise direction. If we accept an aspect ratio of 25 (

Similarly, in the

Based on these estimates, we require about 186,600 grid points for an LES in this case without considering the shear layer.
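The chain used in this example to place the first grid point (skin-friction correlation, wall shear stress, friction velocity, wall distance) can be sketched as follows. The sketch assumes the commonly quoted form of the Schlichting flat-plate correlation, Cf = (2 log10(Re_x) - 0.65)^(-2.3), which is usually stated to hold for Re_x below about 1e9; whether this is exactly the form intended in the example cannot be confirmed from the text. The function names and the input values are hypothetical and are not the Jovic and Driver (1994) parameters.

```python
import math


def schlichting_cf(re_x):
    """Flat-plate skin-friction coefficient (assumed Schlichting form)."""
    return (2.0 * math.log10(re_x) - 0.65) ** (-2.3)


def first_cell_height(y_plus, u_inf, x, rho, mu):
    """Wall distance of the first grid point for a target y+."""
    nu = mu / rho
    re_x = rho * u_inf * x / mu
    cf = schlichting_cf(re_x)
    tau_w = 0.5 * cf * rho * u_inf**2   # wall shear stress
    u_tau = math.sqrt(tau_w / rho)      # friction velocity
    return y_plus * nu / u_tau


if __name__ == "__main__":
    # Hypothetical air-like values, for illustration only
    rho, mu = 1.2, 1.8e-5       # kg/m^3, Pa s
    u_inf, x = 10.0, 1.0        # m/s, m
    for y_plus in (1.0, 30.0):
        y = first_cell_height(y_plus, u_inf, x, rho, mu)
        print(f"y+ = {y_plus:5.1f}  ->  y = {y:.3e} m")
```

The two targets in the usage example correspond to a wall-resolved first point (y+ near 1) and a wall-function first point in the log-law region, which illustrates how much the near-wall spacing relaxes when the wall is modelled.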

Having finished this class, you should now be able to answer the following questions:

- What are the considerations to estimate the total mesh size of a simulation?

- How do I estimate the total time steps needed for a simulation?

- What are the various approaches to estimate the memory and storage requirements of a simulation?

- What parameters are involved in computing the total simulation costs?